Section: New Results

Dynamic Reconfiguration of Feature Models

Participants: Sabine Moisan, Jean-Paul Rigault.

Keywords: feature models, model at run time, self-adaptive systems

In video understanding systems, context changes (detected by system sensors) are often unexpected and can combine in unpredictable ways, which makes it difficult to determine in advance (offline) the running configuration suited to each combination of contexts. To address this issue, we keep at run time a model of the system and its context, together with its current running configuration. We adopted an enriched Feature Model approach to express the variability of both the architecture and the context. A context change is transformed into a set of feature modifications (selections/deselections of features) that are processed on the fly. This year we proposed a fully automatic mechanism that computes at run time the impact of the current selection/deselection requests. First, the requested modifications are checked for consistency; second, they are applied as a single atomic “transaction” to the current configuration, yielding a new configuration compliant with the model; finally, the running system architecture is updated accordingly. We implemented the reconfiguration step, together with its algorithms and heuristics, and evaluated its run-time efficiency.
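The three steps (consistency check, atomic application, architecture update) can be illustrated with the Python sketch below. It is a minimal illustration under our own assumptions, not the algorithm detailed in [41]: the `FeatureModel` class, `apply_transaction`, the sibling-dropping heuristic for alternative groups and the toy camera model are hypothetical. Features are plain strings, a configuration is the set of selected features, and a request set is accepted or rejected as a whole, so the running configuration is never left half-modified.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

Request = tuple[str, bool]  # (feature, True = select / False = deselect)


@dataclass
class FeatureModel:
    """Hypothetical minimal feature model: a feature tree plus cross-tree constraints."""
    parent: dict[str, Optional[str]]                             # feature -> parent (None = root)
    mandatory: set[str] = field(default_factory=set)             # present whenever the parent is
    xor_groups: list[set[str]] = field(default_factory=list)     # sibling alternatives: exactly one
    requires: set[tuple[str, str]] = field(default_factory=set)  # (a, b): a implies b
    excludes: set[tuple[str, str]] = field(default_factory=set)  # (a, b): never both

    def ancestors(self, f: str) -> set[str]:
        out, p = set(), self.parent[f]
        while p is not None:
            out.add(p)
            p = self.parent[p]
        return out

    def descendants(self, f: str) -> set[str]:
        out, stack = set(), [f]
        while stack:
            cur = stack.pop()
            kids = {c for c, p in self.parent.items() if p == cur}
            stack.extend(kids - out)
            out |= kids
        return out

    def is_valid(self, cfg: set[str]) -> bool:
        if any(p is None and f not in cfg for f, p in self.parent.items()):
            return False  # the root must be selected
        if any(self.parent[f] is not None and self.parent[f] not in cfg for f in cfg):
            return False  # a selected feature needs its parent
        if any(self.parent[f] in cfg and f not in cfg for f in self.mandatory):
            return False
        for group in self.xor_groups:
            if self.parent[next(iter(group))] in cfg and len(group & cfg) != 1:
                return False
        if any(a in cfg and b not in cfg for a, b in self.requires):
            return False
        return not any(a in cfg and b in cfg for a, b in self.excludes)


def apply_transaction(model: FeatureModel, current: set[str], requests: list[Request]):
    """Return (new_config, added, removed), or None if the request set is rejected."""
    select = {f for f, on in requests if on}
    deselect = {f for f, on in requests if not on}
    # Step 1: the request set itself must be consistent.
    if select & deselect or any(a in select and b in select for a, b in model.excludes):
        return None
    # Step 2: apply every change to a copy at once, then complete the copy.
    cfg = set(current)
    for f in deselect:
        cfg -= {f} | model.descendants(f)
    for f in select:
        cfg |= {f} | model.ancestors(f)
        for group in (g for g in model.xor_groups if f in g):
            for sib in group - {f}:  # simple heuristic: selecting an alternative drops the others
                cfg -= {sib} | model.descendants(sib)
    while True:  # propagate mandatory children and 'requires' constraints to a fixpoint
        needed = {c for c in model.mandatory if model.parent[c] in cfg}
        needed |= {b for a, b in model.requires if a in cfg}
        for b in list(needed):
            needed |= model.ancestors(b)
        if needed <= cfg:
            break
        cfg |= needed
    if not select <= cfg or cfg & deselect or not model.is_valid(cfg):
        return None  # rollback: the running configuration is left untouched
    # Step 3: the difference drives the update of the running architecture.
    return cfg, cfg - current, current - cfg


# Toy model: the camera is either Day or Night, and Night requires the IR illuminator.
model = FeatureModel(
    parent={"VS": None, "Camera": "VS", "Day": "Camera", "Night": "Camera",
            "IR": "VS", "Tracking": "VS"},
    mandatory={"Camera"},
    xor_groups=[{"Day", "Night"}],
    requires={("Night", "IR")},
)
running = {"VS", "Camera", "Day"}
print(apply_transaction(model, running, [("Night", True)]))  # adds Night and IR, removes Day
print(apply_transaction(model, running, [("Night", True), ("IR", False)]))  # rejected: None
```

Because completion and validation happen on a copy, a rejected request set costs nothing to undo; only the returned difference (added and removed features) would need to be turned into component changes.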

Our ultimate goal is to control the system through a feedback loop that goes from video components and sensor events to feature model manipulation, and back to modifications of the video components.
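One iteration of such a loop might look like the following Python sketch. The event names, the `CONTEXT_RULES` and `COMPONENTS` tables, `feedback_step` and `toy_reconfigure` are all hypothetical; `toy_reconfigure` merely stands in for the transactional reconfiguration step and only rejects contradictory requests. Events observed during the same cycle are merged into a single request set, so that unforeseen combinations of context changes are handled together, and rejected together when they conflict.

```python
from __future__ import annotations

from typing import Callable, Optional

Request = tuple[str, bool]  # (feature, True = select / False = deselect)

# Hypothetical rules mapping context/sensor events to feature (de)selections.
CONTEXT_RULES: dict[str, list[Request]] = {
    "light_low": [("Night", True), ("Day", False)],
    "light_high": [("Day", True), ("Night", False)],
    "crowd": [("Tracking", True)],
    "scene_empty": [("Tracking", False)],
}

# Hypothetical mapping from features to the video components implementing them.
COMPONENTS = {"Day": "day_grabber", "Night": "ir_grabber", "Tracking": "tracker"}


def feedback_step(
    events: list[str],
    running: set[str],
    reconfigure: Callable[[set[str], list[Request]], Optional[set[str]]],
) -> tuple[set[str], set[str], set[str]]:
    """One loop iteration; returns (configuration, components_to_start, components_to_stop)."""
    # All events observed during this cycle are merged into a single request set.
    requests = [r for e in events for r in CONTEXT_RULES.get(e, [])]
    new = reconfigure(running, requests) if requests else None
    if new is None:  # no relevant event, or requests rejected: keep the running configuration
        return running, set(), set()
    start = {COMPONENTS[f] for f in new - running if f in COMPONENTS}
    stop = {COMPONENTS[f] for f in running - new if f in COMPONENTS}
    return new, start, stop


def toy_reconfigure(cfg: set[str], requests: list[Request]) -> Optional[set[str]]:
    """Stand-in for the transactional step: reject contradictory requests, else apply them."""
    on = {f for f, s in requests if s}
    off = {f for f, s in requests if not s}
    if on & off:
        return None
    return (cfg - off) | on


print(feedback_step(["light_low", "crowd"], {"VS", "Day"}, toy_reconfigure))
print(feedback_step(["light_low", "light_high"], {"VS", "Day"}, toy_reconfigure))  # conflict
```

Passing the reconfiguration step as a parameter keeps the loop independent of how feature model changes are actually computed.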

The fully automatic adaptation that we propose resembles what a Feature Model editor does, which is why we previously tried to embed a general-purpose feature model editor at run time. This attempt revealed two major differences between our mechanism and an editor. First, in a fully automatic process no human being drives the series of edits, so heuristics are required. Second, editor operations are often elementary, whereas we need a global, “transaction-like” application of all the selections/deselections to avoid temporary inconsistencies.
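The second difference can be illustrated with a toy Python example in which the feature names and constraints are hypothetical: a night camera requires an IR illuminator, which is assumed here to exclude day mode. Switching from day to night fails when each edit is applied and checked individually in the given order, because the intermediate state containing both Day and Night breaks a constraint, whereas applying the whole batch and checking only the final state succeeds. Some other orderings of the same edits would pass, but choosing one is precisely the kind of decision a human makes in an editor.

```python
Request = tuple[str, bool]  # (feature, True = select / False = deselect)

# Hypothetical constraints: Night requires the IR illuminator, which excludes day mode.
REQUIRES = {("Night", "IR")}
EXCLUDES = {("Day", "Night"), ("IR", "Day")}


def consistent(cfg: set[str]) -> bool:
    return (all(b in cfg for a, b in REQUIRES if a in cfg)
            and not any(a in cfg and b in cfg for a, b in EXCLUDES))


def elementary_edits(cfg: set[str], requests: list[Request]):
    """Editor-style: apply and check one edit at a time; stop at the first violation."""
    cfg = set(cfg)
    for f, on in requests:
        cfg = cfg | {f} if on else cfg - {f}
        if not consistent(cfg):
            return None, (f, on)  # this intermediate state breaks a constraint
    return cfg, None


def transaction(cfg: set[str], requests: list[Request]):
    """Transaction-style: apply the whole batch, then check only the final state."""
    new = set(cfg)
    for f, on in requests:
        new = new | {f} if on else new - {f}
    return new if consistent(new) else None


switch = [("Night", True), ("IR", True), ("Day", False)]
print(elementary_edits({"Day"}, switch))  # fails on the first edit: Day and Night together
print(transaction({"Day"}, switch))       # succeeds: the final state contains Night and IR
```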

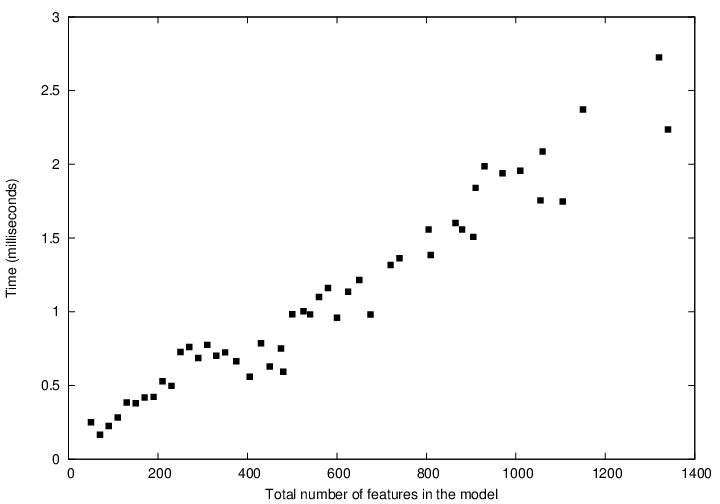

To evaluate the performance of our algorithm, we randomly generated feature models (from 60 to 1400 features) as well as random context changes. The results are shown in Figure 24: no explosion of the processing time is noticeable; in fact, the time appears to grow roughly linearly with the number of features. Moreover, computing a new initial partial configuration takes no more than 3 ms, even for a fairly large model. The algorithm and its evaluation are detailed in [41].
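A benchmark of this kind could be set up along the lines of the Python sketch below. The generator (a random tree, a 0.3 probability of mandatory features, one `requires` constraint per 20 features), the random context changes, and the `closure` function used as the timed step are our assumptions, not the generator or the algorithm evaluated in [41]; the sketch only prints timings and makes no claim about the values it would report.

```python
import random
import time


def random_feature_model(n: int, seed=None, p_mandatory: float = 0.3, n_requires=None):
    """Random tree of n features F0..F{n-1} (F0 = root) plus random 'requires' pairs."""
    rng = random.Random(seed)
    parent = {"F0": None}
    for i in range(1, n):
        parent[f"F{i}"] = f"F{rng.randrange(i)}"  # attach to an earlier feature: always a tree
    mandatory = {f for f, p in parent.items() if p is not None and rng.random() < p_mandatory}
    k = n // 20 if n_requires is None else n_requires
    k = min(k, n * (n - 1))  # cannot draw more distinct ordered pairs than exist
    features, requires = list(parent), set()
    while len(requires) < k:
        requires.add(tuple(rng.sample(features, 2)))
    return parent, mandatory, requires


def random_context_change(parent, k: int, seed=None):
    """k distinct features, each randomly marked for selection or deselection."""
    rng = random.Random(seed)
    return [(f, rng.random() < 0.5) for f in rng.sample(list(parent), k)]


def closure(parent, mandatory, requires, selected):
    """Stand-in step to time: close a selection under parent, mandatory and 'requires' rules."""
    children, req = {}, {}
    for c, p in parent.items():
        children.setdefault(p, []).append(c)
    for a, b in requires:
        req.setdefault(a, []).append(b)
    cfg, todo = set(), list(selected)
    while todo:
        f = todo.pop()
        if f in cfg:
            continue
        cfg.add(f)
        if parent[f] is not None:
            todo.append(parent[f])
        todo.extend(c for c in children.get(f, []) if c in mandatory)
        todo.extend(req.get(f, []))
    return cfg


def time_it(step, *args, repeat: int = 5) -> float:
    """Best-of-`repeat` wall-clock time of step(*args), in milliseconds."""
    best = float("inf")
    for _ in range(repeat):
        t0 = time.perf_counter()
        step(*args)
        best = min(best, (time.perf_counter() - t0) * 1000)
    return best


if __name__ == "__main__":
    for n in (60, 200, 600, 1400):
        parent, mandatory, requires = random_feature_model(n, seed=n)
        change = random_context_change(parent, k=5, seed=n)
        selected = [f for f, on in change if on]
        ms = time_it(closure, parent, mandatory, requires, selected)
        print(f"{n:5d} features: {ms:.3f} ms")
```

Fixing the seed for each model size makes runs reproducible, and keeping the best of several repetitions reduces noise from the interpreter and the operating system.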